neuro-symbolic AI

What is neuro-symbolic AI?

Neuro-symbolic AI combines neural networks with rules-based symbolic processing techniques to improve artificial intelligence systems' accuracy, explainability and precision. The neural aspect involves the statistical deep learning techniques used in many types of machine learning. The symbolic aspect points to the rules-based reasoning approach that's commonly used in logic, mathematics and programming languages.

Combining neural and symbolic AI requires careful calibration

The combination of neural and symbolic approaches has reignited a long-simmering debate in the AI community about the relative merits of symbolic approaches (e.g., if-then statements, decision trees, mathematics) and neural approaches (e.g., deep learning and, more recently, generative AI).

Most machine learning techniques employ various forms of statistical processing. In neural networks, the statistical processing is widely distributed across numerous neurons and interconnections, which increases the effectiveness of correlating and distilling subtle patterns in large data sets. On the other hand, neural networks tend to be slower and require more memory and computation to train and run than other types of machine learning and symbolic AI.

For much of the AI era, symbolic approaches held the upper hand in adding value through apps including expert systems, fraud detection and argument mining. But innovations in deep learning and the infrastructure for training large language models (LLMs) have shifted the focus toward neural networks.

Now, new training techniques in generative AI (GenAI) models have automated much of the human effort required to build better systems for symbolic AI. But these more statistical approaches tend to hallucinate and struggle with math, and their inner workings are opaque.

Building better AI will require a careful balance of both approaches.

Psychologist Daniel Kahneman's distinction between System 1 and System 2 modes of thinking and reasoning is often used as an analogy for neural networks and symbolic approaches. System 1 thinking, as exemplified in neural AI, is better suited for making quick judgments, such as identifying a cat in an image. System 2 analysis, exemplified in symbolic AI, involves slower reasoning processes, such as reasoning about what a cat might be doing and how it relates to other things in the scene.

Fundamentals of neural networks

AI neural networks are modeled after the statistical properties of interconnected neurons in the human brain and brains of other animals. These artificial neural networks (ANNs) create a framework for modeling patterns in data represented by slight changes in the connections between individual neurons, which in turn enables the neural network to keep learning and picking out patterns in data. This can help tease apart features at different levels of abstraction. In the case of images, this could include identifying features such as edges, shapes and objects.
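The idea of learning through slight changes in connection strengths can be sketched with a single artificial neuron. This is a minimal illustration, not how production networks are built: the function names, the learning rate and the tiny logical-AND data set are all assumptions chosen for the example.

```python
# Minimal single-neuron (perceptron) sketch; names and data are illustrative only.

def predict(weights, bias, inputs):
    """Output 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(samples, epochs=20, learning_rate=0.125):
    """Nudge each connection weight slightly after every wrong prediction."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Hypothetical training data: the logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(weights, bias, predictions)
```

Real networks stack many such units into layers and adjust them with gradient-based methods, but the principle of small, repeated weight updates is the same.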

Some proponents have suggested that if we set up big enough neural networks and features, we might develop AI that meets or exceeds human intelligence. However, others, such as anesthesiologist Stuart Hameroff and physicist Roger Penrose, note that these models don't necessarily capture the complexity of intelligence that might result from quantum effects in biological neurons.

Popular categories of ANNs include convolutional neural networks (CNNs), recurrent neural networks (RNNs) and transformers. CNNs are good at processing spatially arranged information, such as the pixels in an image. RNNs better interpret information in a series, such as text or speech. New GenAI techniques often use transformer-based neural networks, which can learn from unlabeled data and so automate much of the data prep work in training AI systems such as ChatGPT and Google Gemini.

One big challenge is that all these tools tend to hallucinate. Concerningly, some of the latest GenAI techniques produce output that sounds confident and plausible even when it is wrong, confusing humans who rely on the results. This problem is not just an issue with GenAI or neural networks, but, more broadly, with all statistical AI techniques.

Neural networks and other statistical techniques excel when there is a lot of pre-labeled data, such as whether a cat is in a video. However, they struggle with long-tail knowledge around edge cases or step-by-step reasoning.

Fundamentals of symbolic reasoning

Symbolic reasoning is a hallmark of all modern programming languages, where programmers say, "If this condition is met, then do that." Symbolic AI is a branch of AI that uses a combination of logical and mathematical processing to reason, make decisions and transform data into a more useful format. Symbolic processes are also at the heart of use cases such as solving math problems, improving data integration and reasoning about a set of facts.

Common symbolic AI algorithms include expert systems, logic programming, semantic networks, Bayesian networks and fuzzy logic. These algorithms are used for knowledge representation, reasoning, planning and decision-making. They work well for applications with well-defined workflows, but struggle when apps are trying to make sense of edge cases.

For example, AI developers created many rule systems to characterize the rules people commonly use to make sense of the world. This resulted in AI systems that could help translate a particular symptom into a relevant diagnosis or identify fraud.

However, this also required much manual effort from experts tasked with deciphering the chain of thought processes that connect various symptoms to diseases or purchasing patterns to fraud. This downside is not a big issue with deciphering the meaning of children's stories or linking common knowledge, but it becomes more expensive with specialized knowledge.

Once they are built, symbolic methods tend to be faster and more efficient than neural techniques. They are also better at explaining and interpreting the AI algorithms responsible for a result.
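A hand-written rule system of the kind described above can be sketched as a small forward-chaining engine. The rules, fact names and the `forward_chain` function are made up for illustration and are not taken from any real expert system. The trace it records shows why symbolic results are comparatively easy to explain.

```python
# Toy forward-chaining rule engine; rules and facts are hypothetical examples.

RULES = [
    # (rule name, facts that must all hold, fact to conclude)
    ("R1", {"fever", "cough"}, "possible_flu"),
    ("R2", {"possible_flu", "short_of_breath"}, "refer_to_doctor"),
]

def forward_chain(initial_facts, rules):
    """Apply if-then rules until no new facts appear, recording each step taken."""
    facts = set(initial_facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for name, conditions, conclusion in rules:
            # A rule fires when all its conditions are known and it adds something new.
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                trace.append(f"{name}: {sorted(conditions)} -> {conclusion}")
                changed = True
    return facts, trace

facts, trace = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
for step in trace:
    print(step)
```

Each line of the trace names the rule that fired and the facts it relied on, which is the kind of audit trail a neural network does not provide by default. The cost is that someone has to write and maintain every rule.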

Symbolic techniques were at the heart of the IBM Watson DeepQA system, which beat two of the top human champions at answering trivia questions in the game Jeopardy! However, this also required much human effort to organize and link all the facts into a symbolic reasoning system, which did not scale well to new use cases in medicine and other domains.

Integrating neural and symbolic AI architectures

Neuro-symbolic AI can be characterized as a spectrum. Some AI approaches are purely symbolic. However, virtually all neural models consume symbols, work with them or output them. For example, a neural network for optical character recognition (OCR) translates images into characters and numbers for processing with symbolic approaches. Generative AI apps similarly start with a symbolic text prompt and then process it with neural nets to deliver text or code.

The excitement within the AI community lies in finding better ways to tinker with the integration between symbolic and neural network aspects. For example, DeepMind's AlphaGo used symbolic techniques to improve the representation of game layouts, process them with neural networks and then analyze the results with symbolic techniques. Other potential use cases of deeper neuro-symbolic integration include improving explainability, labeling data, reducing hallucinations and discerning cause-and-effect relationships.

University of Rochester professor Henry Kautz suggested six approaches for integrating neural and symbolic components into AI systems. Others have recommended adding graph neural networks. Here is an overview of these integration techniques:

- Symbols in and out. Symbols are fed into an AI system and processed to create new symbols. For example, generative AI apps start with a symbolic text prompt and process it with neural nets to deliver text or code.

- Symbolic analysis. The neural network is integrated with a symbolic problem-solver. For example, AlphaGo neural networks generate game moves that are evaluated using symbolic techniques such as Monte Carlo tree search.

- Neural structuring. The neural network transforms raw data into symbols that are processed by symbolic algorithms. For example, OCR translates a document into text, numbers and entities that are categorized with symbolic methods and entered into an enterprise resource planning system.

- Symbolic labeling. Symbolic techniques generate and label training data for neural networks -- for example, automatically generating new math problems and solutions similar to examples in a format better suited for a neural network.

- Symbolic neural generation. A set of symbolic rules is mapped to an embedding scheme used to generate a neural network. For example, Logic Tensor Networks use symbolic techniques to transform raw data into an intermediate format better suited for training neural networks to cluster data, learn relationships, answer queries and classify across multiple labels.

- Full integration. A neural network calls on a symbolic reasoning engine, and the results prime the next stage of neural processing. For example, ChatGPT queries Mathematica via a plugin for solving a math problem, which refines the next phase of ChatGPT processing in responding to a question. Autonomous AI agent research is exploring how these kinds of workflows might be automatically called and chained across various domain-specific AI agents.

- Graph neural networks. These use neural networks to glean relationships from complex systems, such as molecules and social networks, to improve processing with symbolic reasoning and mathematical techniques.
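As one concrete instance of the patterns above, symbolic labeling can be sketched as a generator that builds arithmetic problems and computes their exact answers, producing prompt and label pairs that a neural network could later be trained on. The function names, the prompt wording and the number ranges are assumptions made for this sketch.

```python
import random

def make_example(rng):
    """Build one arithmetic problem and compute its exact answer symbolically."""
    a, b = rng.randint(1, 99), rng.randint(1, 99)
    op = rng.choice(["+", "-", "*"])
    answer = {"+": a + b, "-": a - b, "*": a * b}[op]
    return {"prompt": f"What is {a} {op} {b}?", "label": str(answer)}

def make_dataset(size, seed=0):
    """Generate a reproducible list of labeled examples."""
    rng = random.Random(seed)
    return [make_example(rng) for _ in range(size)]

dataset = make_dataset(5)
for example in dataset:
    print(example["prompt"], "->", example["label"])
```

Because the labels come from exact computation rather than human annotation, the data set can be made as large as needed and its labels are correct by construction.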

Short history of neuro-symbolic AI

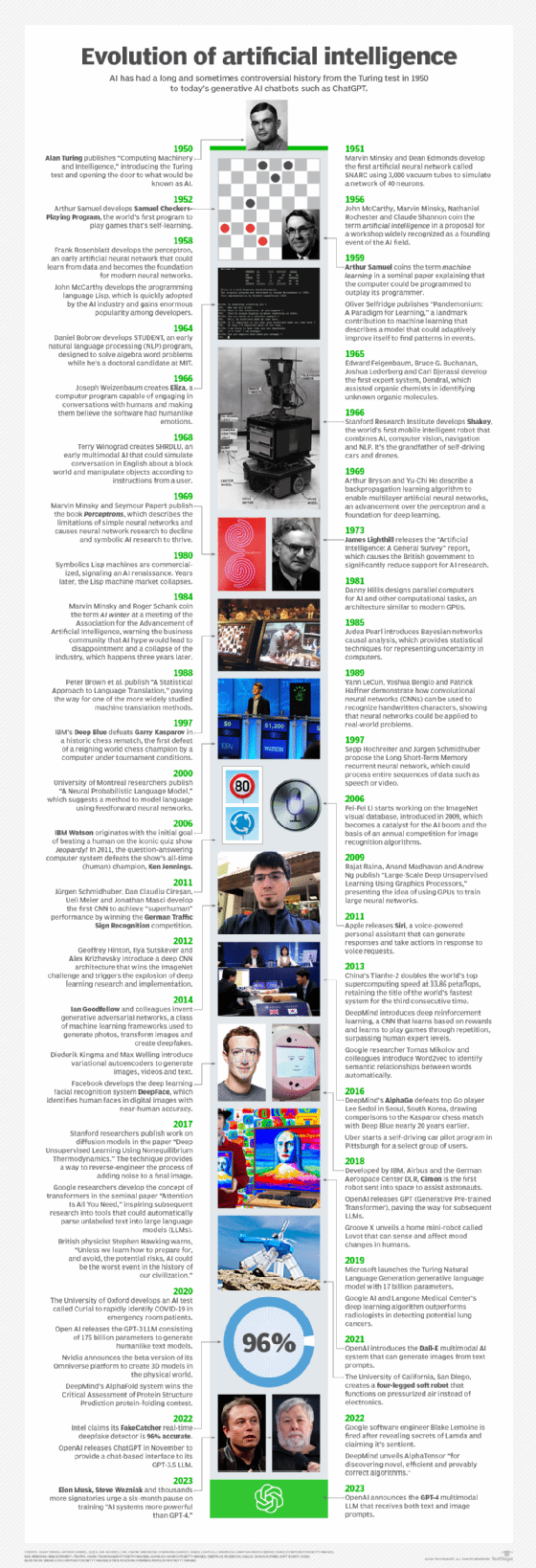

Both symbolic and neural network approaches date back to the earliest days of AI in the 1950s. On the symbolic side, the Logic Theorist program in 1956 helped solve simple theorems. On the neural network side, the Perceptron algorithm in 1958 could recognize simple patterns. However, neural networks fell out of favor in 1969 after AI pioneers Marvin Minsky and Seymour Papert published a book, Perceptrons, criticizing their ability to learn and solve complex problems.

Over the next few decades, research dollars flowed into symbolic methods used in expert systems, knowledge representation, game playing and logical reasoning. However, interest in all AI faded in the late 1980s as AI hype failed to translate into meaningful business value. Symbolic AI emerged again in the mid-1990s with innovations in machine learning techniques that could automate the training of symbolic systems, such as hidden Markov models, Bayesian networks, fuzzy logic and decision tree learning.

Innovations in backpropagation in the mid-1980s helped revive interest in neural networks. This helped address some of the limitations in early neural network approaches, but did not scale well. The discovery in the early 2010s that graphics processing units could help parallelize the training process represented a sea change for neural networks. Google announced a new architecture for scaling neural networks across a computer cluster to train deep learning algorithms, leading to more innovation in neural networks.

Early deep learning systems focused on simple classification tasks like recognizing cats in videos or categorizing animals in images. However, innovations in GenAI techniques such as transformers, autoencoders and generative adversarial networks have opened up a variety of use cases for using generative AI to transform unstructured data into more useful structures for symbolic processing. Now, researchers are looking at how to integrate these two approaches at a more granular level for discovering proteins, discerning business processes and reasoning.

Benefits of neuro-symbolic AI

Researchers are exploring different ways to integrate neural and symbolic techniques to exploit the strengths of each. Symbolic techniques are faster and more precise, but harder to build. Neural techniques are better at distilling subtle patterns from large data sets, but are more prone to hallucination. Here are some of the benefits of combining these approaches:

- Explainability. Symbolic methods can help identify relevant factors associated with a particular neural result. This semantic distillation is at the heart of many explainable AI tools.

- Automated data labeling. Neural techniques can help label data to train more efficient symbolic processing algorithms.

- Hallucination mitigation. Symbolic techniques can double-check neural network results to identify inaccuracies.

- Structuring data. Neural techniques can transform unstructured data from documents and images for use by symbolic techniques.

- Prioritization. Neural networks can prioritize using multiple symbolic algorithms or AI agents to improve efficiency.
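Hallucination mitigation, for instance, can be sketched as a symbolic checker that recomputes an arithmetic claim made by a model. The claim below is a hypothetical model output, and `evaluate` and `check_claim` are illustrative names. The sketch handles only the four basic operators on plain numbers and rejects everything else.

```python
import ast
import operator

# Only these arithmetic operators are allowed; anything else is rejected.
OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def evaluate(expression):
    """Exactly evaluate a plain arithmetic expression without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPERATORS:
            return OPERATORS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)

def check_claim(expression, claimed_value):
    """Compare a model's claimed result with the symbolically computed one."""
    actual = evaluate(expression)
    return {"claimed": claimed_value, "actual": actual, "ok": actual == claimed_value}

# Hypothetical model output: the model asserted that 17 * 23 equals 401.
report = check_claim("17 * 23", 401)
print(report)
```

A checker like this only covers claims that can be restated in a form the symbolic engine understands, so in practice it narrows the space of undetected errors rather than eliminating them.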

Challenges

Some of the top challenges in building neuro-symbolic AI systems include the following:

- Knowledge representation. Symbolic and neural approaches represent various aspects of the world differently, which requires translation back and forth.

- Integration. There are various design patterns for integrating neural and symbolic elements, which can have different effects on their performance, accuracy and utility.

- Hallucination. Symbolic systems primed with inaccurate information can be prone to poor results.

- Model drift. Both symbolic and neural approaches are susceptible to changes in data over time at various rates and in diverse ways. As a result, care must be given when updating each aspect separately or together.

- Autonomous AI. New agentic AI systems can contain numerous symbolic and neural network algorithms, which could create unforeseen problems as individual agents are connected into larger workflows.

Applications of neuro-symbolic AI

Here are some examples where combining neural networks and symbolic AI could improve results:

- Drug discovery. Symbolic AI translates chemical structures for more efficient neural processing.

- Autonomous vehicles. Neural networks translate camera data into a 3D representation for analysis with symbolic techniques.

- Intelligent documents. Neural networks are used to identify numbers, entities and categories to improve processing by symbolic techniques.

- Financial fraud detection. Neural networks identify subtle patterns associated with fraud that are used to generate and label more examples for training faster symbolic algorithms.

- Recommender systems. Symbolic AI helps structure domain knowledge into the recommender process to help tune the neural network.

Future directions

The research community is still in the early phase of combining neural networks and symbolic AI techniques. Much of the current work considers these two approaches as separate processes with well-defined boundaries, such as using one to label data for the other. The next wave of innovation will involve combining both techniques more granularly. This is a much harder problem to solve.

For example, AI models might benefit from combining more structural information across various levels of abstraction, such as transforming a raw invoice document into information about purchasers, products and payment terms. An internet of things stream could similarly benefit from translating raw time-series data into relevant events, performance analysis data, or wear and tear. Future innovations will require exploring and finding better ways to represent all of these to improve their use by symbolic and neural network algorithms.
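The time-series case can be sketched as a function that turns raw readings into discrete events. The fixed threshold rule here is a deliberately simple stand-in for whatever learned or symbolic method a real system would use, and the sensor readings, the `overheat` event type and the limit of 80 are all invented for the example.

```python
def extract_events(readings, limit):
    """Turn (timestamp, value) readings into 'overheat' events with start, end and peak."""
    events, current = [], None
    for timestamp, value in readings:
        if value > limit:
            if current is None:
                # A new run of readings above the limit starts here.
                current = {"type": "overheat", "start": timestamp,
                           "end": timestamp, "peak": value}
            else:
                current["end"] = timestamp
                current["peak"] = max(current["peak"], value)
        elif current is not None:
            # The run has ended; store the finished event.
            events.append(current)
            current = None
    if current is not None:
        events.append(current)
    return events

# Hypothetical sensor stream: (timestamp, temperature) pairs.
READINGS = [(0, 61), (1, 64), (2, 82), (3, 88), (4, 70), (5, 85)]
events = extract_events(READINGS, limit=80)
for event in events:
    print(event)
```

The resulting event records are a higher-level representation that either a rule engine or a neural model could consume more easily than the raw stream.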

Another area of innovation will be improving the interpretability and explainability of large language models common in generative AI. While LLMs can provide impressive results in some cases, they fare poorly in others. Improvements in symbolic techniques could help to efficiently examine LLM processes to identify and rectify the root cause of problems.